Memory Pools in Android 11

Last Updated: 02 Feb, 2023

Memory pools are the mechanism NNAPI uses to pass operand data between the Android framework and device drivers, and Android 11 extends them with driver-managed memory domains. In this Geeks for Geeks article we look at what memory pools are and how constant operands, execution inputs and outputs, and driver-managed buffers are exchanged through them. At model compilation time, the framework gives the driver the values of the constant operands. Depending on the operand's lifetime, those values are stored either in a HIDL vector or in a shared memory pool.

Constant Operands: HIDL Vectors vs. Shared Memory Pools

- If the lifetime is CONSTANT_COPY, the values are located in the operandValues field of the model structure. Because the values in a HIDL vector are copied during interprocess communication (IPC), the vector is normally used only for small amounts of data, such as scalar operands (for example, the activation scalar in ADD) and small tensor parameters (for example, the shape tensor in RESHAPE).

- If the lifetime is CONSTANT_REFERENCE, the values are located in the pools field of the model structure. During IPC only the handles of the shared memory pools are duplicated, not their contents. As a result, shared memory pools are more efficient than HIDL vectors for holding large amounts of data, such as the weight parameters in convolutions.
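To make the two lifetimes concrete, here is a minimal Python sketch of how a driver might locate a constant operand's bytes. It assumes simplified, hypothetical stand-ins for the HAL structures: `Model.operand_values` plays the role of operandValues, `Model.pools` holds already-mapped pool contents as plain bytes, and `DataLocation` carries a pool index, offset, and length. It illustrates the lookup logic only and is not the HAL's actual type definitions.

```python
from dataclasses import dataclass
from enum import Enum, auto


class OperandLifetime(Enum):
    # Only the two constant lifetimes discussed above are modeled.
    CONSTANT_COPY = auto()
    CONSTANT_REFERENCE = auto()


@dataclass
class DataLocation:
    pool_index: int  # unused for CONSTANT_COPY in this sketch
    offset: int
    length: int


@dataclass
class Operand:
    lifetime: OperandLifetime
    location: DataLocation


@dataclass
class Model:
    operand_values: bytes  # small constants, copied by value over IPC
    pools: list[bytes]     # large constants; stand-ins for mapped shared memory


def constant_bytes(model: Model, operand: Operand) -> bytes:
    """Return a constant operand's raw bytes, looked up according to its lifetime."""
    loc = operand.location
    if operand.lifetime is OperandLifetime.CONSTANT_COPY:
        source = model.operand_values
    else:  # CONSTANT_REFERENCE: index into the list of shared pools
        source = model.pools[loc.pool_index]
    end = loc.offset + loc.length
    if loc.offset < 0 or end > len(source):
        raise ValueError("operand location is out of bounds")
    return source[loc.offset:end]
```

For example, a 4-byte activation scalar would come from `operand_values`, while a slice of convolution weights would be read from `pools[0]` at its recorded offset.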

How Execution Data Is Passed to the Driver

At model execution time, the framework gives the driver the buffers for the input and output operands. Unlike compile-time constants, which may be sent in a HIDL vector, the input and output data of an execution is always communicated through a collection of memory pools.
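A sketch of the execution-time side, again in Python with hypothetical types: each input or output argument names a pool by index plus an offset and length inside it, and the driver checks those bounds before touching the memory. `RequestArgument`, `validate_arguments`, `read_input`, and `write_output` are illustrative names rather than HAL APIs, and the pools are modeled as `bytearray` objects that are assumed to be mapped already.

```python
from dataclasses import dataclass


@dataclass
class RequestArgument:
    pool_index: int  # which memory pool of the execution holds the data
    offset: int      # byte offset inside that pool
    length: int      # number of bytes reserved for the operand


def validate_arguments(args: list[RequestArgument], pool_sizes: list[int]) -> bool:
    """Check that every input/output argument lies inside one of the execution's pools."""
    for arg in args:
        if not 0 <= arg.pool_index < len(pool_sizes):
            return False
        if arg.offset < 0 or arg.length < 0:
            return False
        if arg.offset + arg.length > pool_sizes[arg.pool_index]:
            return False
    return True


def read_input(pools: list[bytearray], arg: RequestArgument) -> bytes:
    """Copy an input operand's bytes out of its pool."""
    pool = pools[arg.pool_index]
    return bytes(pool[arg.offset:arg.offset + arg.length])


def write_output(pools: list[bytearray], arg: RequestArgument, data: bytes) -> None:
    """Write an output operand's bytes into the region reserved for it."""
    if len(data) > arg.length:
        raise ValueError("output is larger than the region reserved for it")
    pool = pools[arg.pool_index]
    pool[arg.offset:arg.offset + len(data)] = data
```

Validating up front means a malformed request fails cleanly instead of reading or writing outside a pool.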

During both compilation and execution, an unmapped shared memory pool is represented by the HIDL data type hidl_memory. The driver should map the memory according to the name stored in the hidl_memory struct to make it usable. The supported memory names are:

- ashmem: Android shared memory.

- mmap_fd: Shared memory backed by a file descriptor through mmap.

- hardware_buffer_blob: Shared memory backed by an AHardwareBuffer with the format AHARDWAREBUFFER_FORMAT_BLOB. Available from Neural Networks (NN) HAL 1.2.

- hardware_buffer: Shared memory backed by a general AHardwareBuffer that does not use the format AHARDWAREBUFFER_FORMAT_BLOB. Available from NN HAL 1.2.
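For the file-descriptor-backed pool type, mapping ultimately comes down to an mmap call. The sketch below uses Python's standard `mmap` module to map a descriptor read-only. It assumes the descriptor and size have already been extracted from the hidl_memory handle, a HAL-specific step that is not modeled here, and `map_fd_region` is a hypothetical helper name.

```python
import mmap
import tempfile


def map_fd_region(fd: int, size: int) -> mmap.mmap:
    """Map the first `size` bytes of an open file descriptor read-only.

    Extracting the fd and size from a hidl_memory handle is assumed to have
    happened already; that step is not modeled here.
    """
    return mmap.mmap(fd, size, access=mmap.ACCESS_READ)


if __name__ == "__main__":
    # Stand-in for a pool the client filled: a temporary file holding 8 bytes.
    with tempfile.TemporaryFile() as f:
        f.write(bytes(range(8)))
        f.flush()
        with map_fd_region(f.fileno(), 8) as view:
            print(list(view[2:5]))  # prints [2, 3, 4]
```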

GeekTip #1: NNAPI drivers must support mapping the ashmem and mmap_fd memory names. Starting with NN HAL 1.3, drivers must also support mapping hardware_buffer_blob. Support for general non-BLOB hardware_buffer memory and for memory domains is optional.
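The support rules described above can be written down as a small lookup. This hypothetical Python helper encodes only those rules: hardware buffers are available from NN HAL 1.2, `hardware_buffer_blob` becomes mandatory from 1.3, and `ashmem` and `mmap_fd` are always mandatory. The `NnHal` enum and the returned strings are illustrative.

```python
from enum import IntEnum


class NnHal(IntEnum):
    V1_0 = 10
    V1_1 = 11
    V1_2 = 12
    V1_3 = 13


def mapping_requirement(name: str, hal: NnHal) -> str:
    """Classify a hidl_memory name as 'required', 'optional', or 'unavailable'."""
    if name in ("ashmem", "mmap_fd"):
        return "required"
    if name in ("hardware_buffer_blob", "hardware_buffer"):
        if hal < NnHal.V1_2:
            return "unavailable"  # hardware buffers arrived in NN HAL 1.2
        if name == "hardware_buffer_blob" and hal >= NnHal.V1_3:
            return "required"
        return "optional"  # non-BLOB hardware_buffer is always optional
    raise ValueError(f"unknown memory name: {name!r}")
```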

AHardwareBuffer

An AHardwareBuffer is a type of shared memory that wraps a Gralloc buffer. In Android 10, the Neural Networks API (NNAPI) supports using AHardwareBuffer, which lets the driver perform executions without copying data and so improves app performance and power consumption. For instance, a camera HAL stack can pass AHardwareBuffer objects to NNAPI for machine learning workloads using AHardwareBuffer handles produced by the camera NDK and media NDK APIs. The driver must correctly decode the supplied hidl_handle field in order to access the memory it describes. When the getSupportedOperations_1_2, getSupportedOperations_1_1, or getSupportedOperations method is invoked, the driver should determine whether it can decode the supplied hidl_handle and access the memory it describes.
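The getSupportedOperations family returns one boolean per operation in the model. The Python sketch below shows one way a driver might fold handle decodability into that answer: an operation is reported as supported only if the driver implements it and can decode every pool holding its constant operands. `Operation`, `constant_pools`, and the precomputed `pool_decodable` flags are hypothetical simplifications; a real driver would inspect the native handle wrapped by each hidl_handle.

```python
from dataclasses import dataclass


@dataclass
class Operation:
    name: str
    constant_pools: list[int]  # indices of the pools holding this operation's constants


def get_supported_operations(operations: list[Operation],
                             pool_decodable: list[bool],
                             supported_types: set[str]) -> list[bool]:
    """Return one flag per operation, in model order."""
    result = []
    for op in operations:
        implemented = op.name in supported_types
        # Every pool this operation depends on must have a decodable handle.
        decodable = all(pool_decodable[i] for i in op.constant_pools)
        result.append(implemented and decodable)
    return result
```

Reporting the problem here lets the framework route the affected operations elsewhere instead of discovering an undecodable handle at preparation time.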

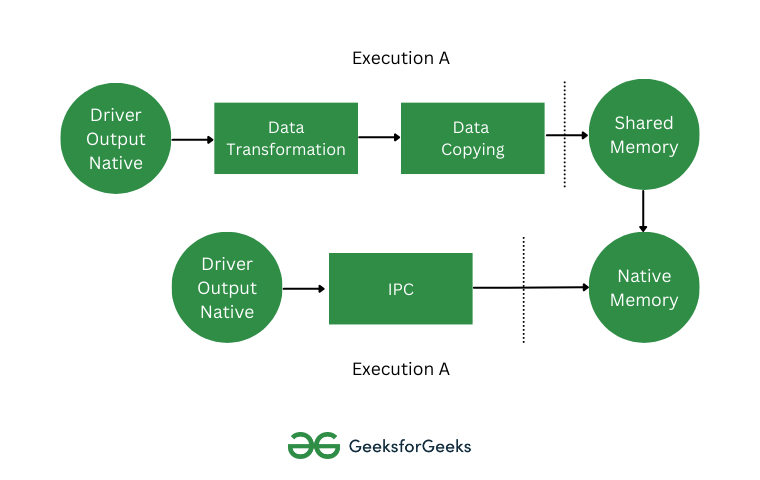

Image #1: Data Flow in Memory Pools

Memory Domains

On devices running Android 11 or higher, NNAPI supports memory domains, which provide allocator interfaces for driver-managed buffers. This lets device-native memory be passed between executions, avoiding unnecessary data copying and transformation between consecutive executions on the same driver. The memory domain feature is intended for tensors that are mostly internal to the driver and do not need frequent client-side access; the state tensors in sequence models are one example. For tensors that need frequent client-side CPU access, shared memory pools are the better choice.

To support memory domains, implement IDevice::allocate so that the framework can request allocation of driver-managed buffers. During allocation, the framework provides the following buffer properties and usage patterns:

- BufferDesc describes the required properties of the buffer.

- BufferRole describes a potential usage pattern of the buffer as an input or output of a prepared model. Multiple roles can be specified during allocation, and the allocated buffer can be used only in those roles.
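Because an allocated buffer may only be used in the roles declared at allocation time, the driver has to remember those roles and check every later use against them. A minimal Python sketch of that bookkeeping follows. `BufferRole` here is a hypothetical simplification (model index, input or output, and I/O index), the integer token stands in for whatever handle the driver returns, and rejecting an empty role list is a choice made for this sketch.

```python
import itertools
from dataclasses import dataclass
from enum import Enum


class IoType(Enum):
    INPUT = "input"
    OUTPUT = "output"


@dataclass(frozen=True)
class BufferRole:
    model_index: int  # which prepared model the role refers to
    io_type: IoType   # used as an input or an output of that model
    io_index: int     # which input or output


class DriverBufferAllocator:
    """Hands out tokens for driver-managed buffers and remembers their roles."""

    def __init__(self) -> None:
        self._next_token = itertools.count(1)
        self._roles: dict[int, frozenset[BufferRole]] = {}

    def allocate(self, roles: list[BufferRole]) -> int:
        if not roles:
            raise ValueError("at least one role is required")
        token = next(self._next_token)
        self._roles[token] = frozenset(roles)
        return token

    def check_usage(self, token: int, usage: BufferRole) -> bool:
        """True only if the buffer exists and `usage` was declared at allocation."""
        allowed = self._roles.get(token)
        return allowed is not None and usage in allowed
```

An execution that tries to use a buffer in an undeclared role can then be failed immediately.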

GeekTip #2: When a driver-managed buffer is accessed concurrently for writing, the driver may return an error or leave the buffer's content in an indeterminate state.

How IBuffer Works

The IBuffer object is used for explicit memory copying. In certain situations, the driver's client must initialize the driver-managed buffer from a shared memory pool or copy the buffer out to one. Example use cases include:

- Caching intermediate results

- Initializing the state tensor

- CPU fallback execution
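A toy Python model of explicit copying is shown below. The method names echo IBuffer's copyFrom and copyTo, but the signatures are hypothetical: they take plain byte arrays instead of hidl_memory pools, and refusing to copy out of a buffer that was never initialized is an assumption of this sketch.

```python
class ManagedBuffer:
    """Toy stand-in for a driver-managed buffer that supports explicit copies."""

    def __init__(self, size: int) -> None:
        self._data = bytearray(size)
        self._initialized = False

    def copy_from(self, src: bytes) -> None:
        """Initialize the buffer from a shared pool, e.g. to set a state tensor."""
        if len(src) != len(self._data):
            raise ValueError("source size does not match buffer size")
        self._data[:] = src
        self._initialized = True

    def copy_to(self, dst: bytearray) -> None:
        """Copy the buffer out to a shared pool, e.g. for CPU fallback."""
        if not self._initialized:
            raise RuntimeError("buffer has not been initialized")
        if len(dst) != len(self._data):
            raise ValueError("destination size does not match buffer size")
        dst[:] = self._data
```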

Determining the Buffer Dimensions

During buffer allocation, the buffer's dimensions can be deduced from the model operands corresponding to every role listed in BufferRole, together with the dimensions provided in BufferDesc. Even with all of this information combined, the buffer's dimensions or rank may still be unknown. In that case the buffer is in a flexible state: its dimensions are fixed when it is used as a model input and dynamic when it is used as a model output. The same buffer can therefore be used with different output shapes in different executions, and the driver must handle buffer resizing correctly.
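Deducing dimensions amounts to merging partial shapes. The Python sketch below assumes the NNAPI convention that an empty dimension list means unknown rank and a 0 entry means an unknown extent. `combine_dimensions` and `deduce_buffer_dimensions` are hypothetical helpers, and returning `None` on a conflict stands in for the driver rejecting the allocation.

```python
from typing import Optional

Dims = list[int]  # [] = unknown rank; 0 entry = unknown extent


def combine_dimensions(a: Dims, b: Dims) -> Optional[Dims]:
    """Merge two partial shapes, or return None if they conflict."""
    if not a:
        return list(b)
    if not b:
        return list(a)
    if len(a) != len(b):
        return None  # ranks disagree
    merged = []
    for x, y in zip(a, b):
        if x == 0:
            merged.append(y)
        elif y == 0 or x == y:
            merged.append(x)
        else:
            return None  # two different known extents
    return merged


def deduce_buffer_dimensions(desc: Dims, role_operand_dims: list[Dims]) -> Optional[Dims]:
    """Fold the BufferDesc dimensions together with every role's operand dimensions."""
    result: Optional[Dims] = list(desc)
    for dims in role_operand_dims:
        result = combine_dimensions(result, dims)
        if result is None:
            return None
    return result


def is_fully_specified(dims: Dims) -> bool:
    return bool(dims) and all(d > 0 for d in dims)
```

If the deduced shape is not fully specified, the buffer is in the flexible state described above.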

Concurrent Access

Multiple threads may read from a driver-managed buffer concurrently. Simultaneous write or read/write access to the buffer is undefined, but it must not crash the driver service or block the caller indefinitely.
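One way to meet these requirements is to let readers proceed concurrently but make conflicting access fail fast instead of waiting. The Python sketch below does that with a short-lived lock that only guards two counters. `SharedDriverBuffer` is a hypothetical name, and failing a conflicting read or write is just one of the behaviors the rules permit.

```python
import threading
from typing import Optional


class SharedDriverBuffer:
    """Concurrent reads are allowed; conflicting access fails instead of blocking."""

    def __init__(self, size: int) -> None:
        self._data = bytearray(size)
        self._state = threading.Lock()  # held only briefly, to update the fields below
        self._readers = 0
        self._writing = False

    def read(self) -> Optional[bytes]:
        """Return a snapshot of the contents, or None if a write is in progress."""
        with self._state:
            if self._writing:
                return None
            self._readers += 1
        try:
            return bytes(self._data)
        finally:
            with self._state:
                self._readers -= 1

    def try_write(self, data: bytes) -> bool:
        """Write `data` at offset 0; return False on conflict or overflow."""
        if len(data) > len(self._data):
            return False
        with self._state:
            if self._writing or self._readers:
                return False  # conflicting access: report an error, never wait
            self._writing = True
        try:
            self._data[: len(data)] = data
            return True
        finally:
            with self._state:
                self._writing = False
```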
