Multithreading and Latency Hiding

Last Updated: 17 May, 2023

In computer architecture, multithreading is the ability of a central processing unit (CPU), or of a single core in a multi-core processor, to execute multiple threads concurrently, with support from the operating system. Latency hiding improves machine utilization by letting the processor perform useful work while it waits for a slow operation to complete.

At the software level, multithreading is the ability of a program or process to serve more than one user at a time, or multiple requests from the same user, without running multiple copies of the program. Multithreading is one way to implement latency hiding.

- The general idea of latency hiding methods is to provide each processor with some useful work to do while it waits for remote operation requests to be satisfied.

- The same applies to memory accesses: the processor is given useful work to do while it awaits the completion of memory access requests.

- Latency hiding allows communication to be completely overlapped with computation, leading to high efficiency and hardware utilization.

- Multithreading is a practical mechanism for latency hiding.

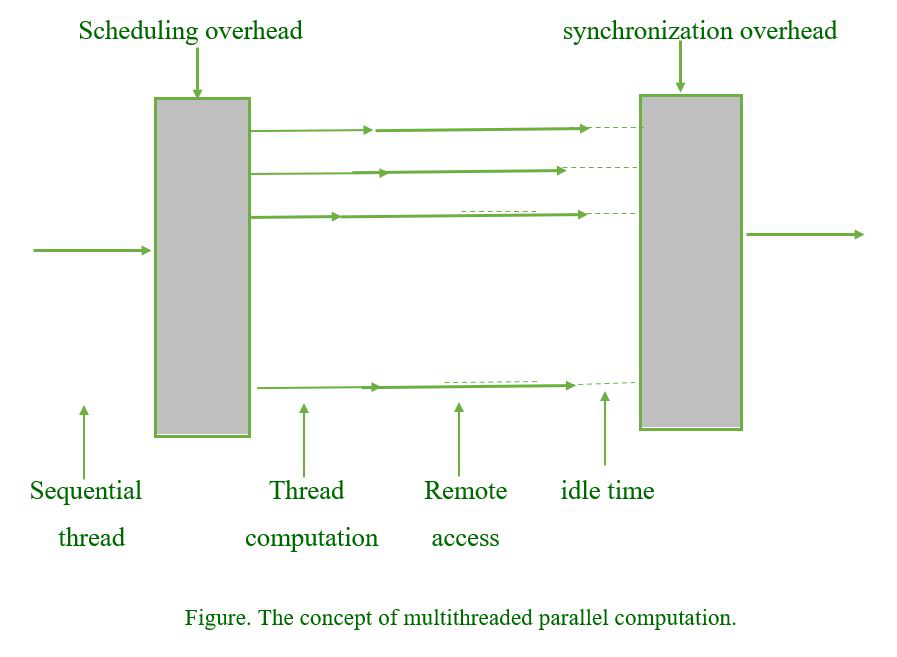

Multithreading is a useful mechanism for hiding latency. A multithreaded computation typically begins with a sequential thread, followed by some supervisory overhead to set up (schedule) various independent threads, then computation and communication (remote accesses) for the individual threads, and finally a synchronization step to terminate the threads before the next unit of computation starts.
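The phases described above can be sketched in a few lines of Python. This is only an illustrative sketch: `remote_fetch`, `worker`, `run_threads`, and the 0.05 s latency are hypothetical names and values chosen for the example, and `time.sleep` stands in for a real remote access.

```python
import threading
import time

REMOTE_LATENCY = 0.05  # seconds; assumed stand-in for a remote access delay


def remote_fetch(index):
    """Pretend to fetch a value from remote memory (hypothetical helper)."""
    time.sleep(REMOTE_LATENCY)  # this thread is blocked; other threads may run
    return index * 10


def worker(index, results):
    value = remote_fetch(index)  # communication (remote access)
    results[index] = value + 1   # computation on the fetched value


def run_threads(n_threads):
    # 1. Sequential part: set up the shared result buffer.
    results = [None] * n_threads
    # 2. Scheduling overhead: create and start the independent threads.
    threads = [threading.Thread(target=worker, args=(i, results))
               for i in range(n_threads)]
    for t in threads:
        t.start()
    # 3. The threads now communicate and compute; their waits overlap.
    # 4. Synchronization: wait for every thread before the next unit starts.
    for t in threads:
        t.join()
    return results


if __name__ == "__main__":
    start = time.perf_counter()
    print(run_threads(4))  # [1, 11, 21, 31]
    print(f"elapsed: {time.perf_counter() - start:.2f} s")
```

Because the simulated remote accesses overlap, the elapsed time should be close to one latency period rather than four, although the exact figure depends on the machine.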

Sequential thread:

Within a program, a thread is a single sequential flow of control. The interest in threads is not about a single sequential thread, however; it is about using multiple threads that run concurrently and perform different tasks within a single program.

Scheduling overhead:

Scheduling overhead is the time the system spends running the scheduling algorithm to decide which process or thread runs next, rather than doing useful work. It arises in any multiprogramming operating system, because having several processes ready to run means the system must also decide which one to run.

Remote memory access:

Remote direct memory access (RDMA) in computing is a direct memory access from one computer’s memory to that of another without involving either computer’s operating system. This enables high-throughput, low-latency networking, which is particularly beneficial in massively parallel computer clusters.

Idle time:

Idle time is the time a processor spends waiting with no useful work to do, for example while a remote memory access is outstanding. Latency hiding aims to fill this time with work from other threads.

Synchronization overhead:

Synchronization overhead is defined as the amount of time one task spends waiting for another. Tasks may synchronize at an explicit barrier where they all finish a timestep. Time spent waiting for other tasks wastes the core on which that task is working. This period of waiting is referred to as synchronization overhead.
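As a small worked example of this definition, the sketch below computes how long each task waits at a barrier, given the time at which each task arrives. The function name `barrier_wait_times` and the arrival times are made up for illustration.

```python
def barrier_wait_times(finish_times):
    """Return how long each task waits at a barrier.

    finish_times[i] is the time at which task i reaches the barrier.
    The barrier opens only when the slowest task arrives.
    """
    release = max(finish_times)
    return [release - t for t in finish_times]


if __name__ == "__main__":
    waits = barrier_wait_times([4, 7, 5, 10])
    print(waits)       # [6, 3, 5, 0]
    print(sum(waits))  # 14 time units of synchronization overhead in total
```

The slowest task waits for nothing, while every other task loses the difference between its own arrival time and the slowest one.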

Multithreading is not limited to parallel processing environments; it also provides benefits on a single processor. For improved performance, a uniprocessor with multiple independent threads to execute can switch between them on each cache miss, on every load, after each instruction, or after each fixed-size block of instructions. The processor may need multiple register sets (to reduce the context-switching overhead) and a larger cache memory (to hold recently referenced data for several threads), but the benefits can outweigh the costs. Hirata et al. [Hira92] claim speedups of 2.0, 3.7, and 5.8 in a processor with nine functional units and two, four, or eight independent threads, respectively.

Multithreading for latency hiding: example

- Suppose each thread runs an instance of a function that reads a pair of vector elements and then computes on them. The first instance of this function accesses a pair of vector elements and waits for them.

- In the meantime, the second instance of this function can access two other vector elements in the next cycle, and so on.

- After l units of time, where l is the latency of the memory system, the first function instance gets the requested data from memory and can perform the required computation.

- In the next cycle, the data items for the next function instance arrive, and so on. In this way, a computation can be performed in every clock cycle.

- The execution schedule in this example rests on two assumptions: the memory system is capable of servicing multiple outstanding requests, and the processor is capable of switching threads at every cycle.

- It also requires the program to have an explicit specification of concurrency in the form of threads.

- Machines such as the HEP and Tera rely on multithreaded processors that can switch the context of execution in every cycle. Consequently, they are able to hide latency effectively.
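The schedule described in the list above can be checked with a small cycle-count simulation. This is a simplified model, not a description of any real machine: it assumes one request is issued per cycle, each computation takes one cycle, thread switching is free, and the memory system can service any number of outstanding requests. The function names and the values `n = 8` and `latency = 100` are chosen only for illustration.

```python
def single_thread_cycles(n, latency):
    """Cycles needed when each access must complete before the next starts."""
    cycles = 0
    for _ in range(n):
        cycles += latency  # stall until the requested data arrives
        cycles += 1        # one cycle of computation
    return cycles


def multithreaded_cycles(n, latency):
    """Cycles needed when a new function instance issues a request every cycle."""
    # Instance i issues its request in cycle i; its data is ready in cycle i + latency.
    ready_at = [i + latency for i in range(n)]
    cycle = 0
    done = 0
    while done < n:
        if ready_at[done] <= cycle:
            done += 1  # the data has arrived: compute for this instance
        cycle += 1
    return cycle


if __name__ == "__main__":
    print(single_thread_cycles(8, 100))  # 808
    print(multithreaded_cycles(8, 100))  # 108
```

Under these assumptions the latency is paid once, at the start, and afterwards one computation completes in every cycle, so the total is n + l cycles instead of n × (l + 1).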

Advantages of Multithreading

- Increased throughput. A single process can handle a large number of concurrent compute operations and I/O requests.

- Use of multiple processors for computation and I/O at the same time and in a fully symmetric manner.

- Excellent application responsiveness. Applications do not freeze or display the “hourglass” if a request can be launched on its own thread.

- Improved server responsiveness. Large or complex requests or slow clients don’t block other requests for service.

- Minimized system resource usage. Threads impose minimal impact on system resources.

- Threads require less overhead to create, maintain, and manage than a traditional process.

Disadvantages of Multithreading

Multithreading also has some common disadvantages:

- Debugging and testing are more difficult.

- The outcome can be unpredictable, because it may depend on how the threads are interleaved.

- Context switching adds overhead.

- There is a greater chance of a deadlock occurring.

- Programs are harder to write.

- Overall complexity increases.

- Access to shared resources (objects, data) must be synchronized.

- Starvation is possible: some threads may not be served if the design is flawed.

- Creating and synchronizing many threads can consume significant CPU time and memory.
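Several of these points come down to shared state. As a minimal sketch (the function `count_with_lock` and its parameters are hypothetical), the code below has several threads update one counter and uses a lock so that each update happens as a single step. Without the lock, concurrent updates may be lost, which is one source of the unpredictable outcomes mentioned above.

```python
import threading


def count_with_lock(n_threads, increments):
    """Have n_threads threads each add 1 to a shared counter `increments` times."""
    counter = 0
    lock = threading.Lock()

    def work():
        nonlocal counter
        for _ in range(increments):
            with lock:        # only one thread at a time may update the counter
                counter += 1

    threads = [threading.Thread(target=work) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter


if __name__ == "__main__":
    print(count_with_lock(4, 10_000))  # 40000
```

The lock makes the result predictable, but every acquisition costs time, which illustrates the trade-off between correctness and synchronization overhead.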

Comparison of Multithreading and Latency Hiding

| Criteria | Multithreading | Latency Hiding |
| --- | --- | --- |
| Definition | Ability of a CPU or software process to execute multiple threads | Technique of providing each processor with useful work as it waits for requests |
| Mechanism | Managing multiple requests by a single copy of the program | Overlapping communication with computation for high efficiency |
| Advantages | Increased throughput, symmetric use of processors, improved response | Improved machine usage, high efficiency, and hardware utilization |
| Disadvantages | Difficult debugging, unpredictable outcomes, context switching | Increased complexity, shared resource synchronization, and potential deadlocks |
| Overhead | Less overhead than traditional processes | Requires synchronization overhead to terminate threads |
| Examples | HEP and Tera | Remote direct memory access (RDMA) |
| Performance improvement | Speedup of 2.0-5.8 on a single processor with multiple independent threads | Effective hiding of latency |

Note: Latency hiding is a technique that can be implemented using multithreading, but it is not limited to it. It is a general technique for improving machine usage.

See also: multithreading models, benefits of multithreading, and the difference between multitasking, multithreading and multiprocessing.
