Proofs and Inferences in Proving Propositional Theorem

Last Updated: 28 Feb, 2022

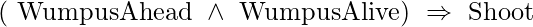

This article discusses how to use inference rules to construct a proof: a chain of conclusions that leads to the desired goal. The best-known rule is called Modus Ponens (Latin for affirming mode) and is written as

$$\frac{\alpha \Rightarrow \beta, \quad \alpha}{\beta}$$

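The notation means that whenever sentences of the form $\alpha \Rightarrow \beta$ and $\alpha$ are given, the sentence $\beta$ can be inferred. For example, if $(\mathit{WumpusAhead} \land \mathit{WumpusAlive}) \Rightarrow \mathit{Shoot}$ and $(\mathit{WumpusAhead} \land \mathit{WumpusAlive})$ are both given, then $\mathit{Shoot}$ can be inferred.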
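To make the pattern concrete, here is a minimal Python sketch of Modus Ponens. It assumes a hypothetical encoding that is not part of the article: atoms are strings and compound sentences are tuples such as `("implies", lhs, rhs)` or `("and", a, b)`. The function name `modus_ponens` is illustrative, not a library API.

```python
# Hypothetical encoding (an assumption for illustration): atoms are strings,
# compound sentences are tuples like ("implies", lhs, rhs) or ("and", a, b).

def modus_ponens(implication, premise):
    """Return beta if implication is alpha => beta and premise is alpha; otherwise None."""
    if isinstance(implication, tuple) and implication[0] == "implies":
        _, antecedent, consequent = implication
        if antecedent == premise:
            return consequent
    return None


ahead_and_alive = ("and", "WumpusAhead", "WumpusAlive")
rule = ("implies", ahead_and_alive, "Shoot")

print(modus_ponens(rule, ahead_and_alive))  # Shoot
print(modus_ponens(rule, "WumpusAhead"))    # None: the premise does not match the antecedent
```

Matching is purely syntactic here: the premise must be identical to the antecedent, which is exactly what "sentences of the form" means in the rule.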

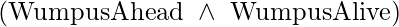

And-Elimination is another useful inference rule. It states that, from a conjunction, any of the conjuncts can be inferred:

$$\frac{\alpha \land \beta}{\alpha}$$

For example, $\mathit{WumpusAlive}$ can be inferred from $(\mathit{WumpusAhead} \land \mathit{WumpusAlive})$. By considering the possible truth values of $\alpha$ and $\beta$, one can easily show, once and for all, that Modus Ponens and And-Elimination are sound. These rules can then be used in any particular situation where they apply, producing sound inferences without the need to enumerate models.
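The soundness argument can be checked mechanically. The sketch below (the helper names `implies` and `is_sound` are illustrative) enumerates every truth assignment to $\alpha$ and $\beta$ and confirms that whenever the premises of each rule are true, so is its conclusion.

```python
from itertools import product


def implies(p, q):
    # Material implication: false only when p is true and q is false.
    return (not p) or q


def is_sound(premises, conclusion):
    """Check a rule over alpha and beta by enumerating all four truth assignments.

    premises: list of functions (alpha, beta) -> bool
    conclusion: function (alpha, beta) -> bool
    """
    for alpha, beta in product([False, True], repeat=2):
        if all(p(alpha, beta) for p in premises) and not conclusion(alpha, beta):
            return False  # a model where every premise holds but the conclusion fails
    return True


# Modus Ponens: from alpha => beta and alpha, infer beta.
mp_sound = is_sound([lambda a, b: implies(a, b), lambda a, b: a], lambda a, b: b)

# And-Elimination: from alpha AND beta, infer alpha.
and_elim_sound = is_sound([lambda a, b: a and b], lambda a, b: a)

print(mp_sound, and_elim_sound)  # True True
```

Because the check ranges over $\alpha$ and $\beta$ rather than any particular sentences, it holds for every instance of the rules, which is the "once and for all" part of the argument.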

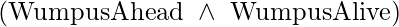

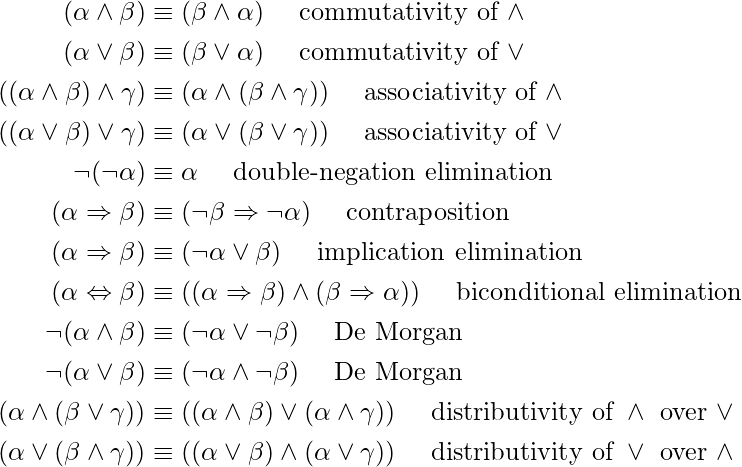

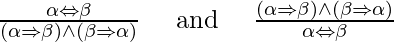

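Each of the logical equivalences shown above can be used as an inference rule. For example, the equivalence for biconditional elimination yields the two inference rules

$$\frac{\alpha \Leftrightarrow \beta}{(\alpha \Rightarrow \beta) \land (\beta \Rightarrow \alpha)} \qquad \text{and} \qquad \frac{(\alpha \Rightarrow \beta) \land (\beta \Rightarrow \alpha)}{\alpha \Leftrightarrow \beta}$$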
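As a sketch of how one equivalence becomes a pair of rules, the functions below implement both directions of biconditional elimination over a hypothetical tuple encoding of sentences (atoms as strings, connectives as tuple tags). `biconditional_introduction` is an illustrative name for the reverse rule. A truth-table check confirms that the two sides agree in every model, which is what licenses using the equivalence in either direction.

```python
from itertools import product


def biconditional_elimination(sentence):
    """alpha <=> beta  yields  (alpha => beta) AND (beta => alpha)."""
    op, alpha, beta = sentence
    if op != "iff":
        raise ValueError("expected a biconditional")
    return ("and", ("implies", alpha, beta), ("implies", beta, alpha))


def biconditional_introduction(sentence):
    """(alpha => beta) AND (beta => alpha)  yields  alpha <=> beta (the reverse rule)."""
    op, left, right = sentence
    if op != "and" or left[0] != "implies" or right[0] != "implies":
        raise ValueError("expected a conjunction of two implications")
    if (left[1], left[2]) != (right[2], right[1]):
        raise ValueError("the implications are not converses of each other")
    return ("iff", left[1], left[2])


def implies(p, q):
    return (not p) or q


# The two sides have the same truth value under every assignment.
equivalent = all(
    (a == b) == (implies(a, b) and implies(b, a))
    for a, b in product([False, True], repeat=2)
)

s = ("iff", "A", "B")
t = biconditional_elimination(s)
print(t)                                   # ('and', ('implies', 'A', 'B'), ('implies', 'B', 'A'))
print(biconditional_introduction(t) == s)  # True
print(equivalent)                          # True
```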

Not all inference rules work in both directions like this. For example, we cannot run Modus Ponens in the opposite direction to obtain $\alpha \Rightarrow \beta$ and $\alpha$ from $\beta$.
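A short truth-table search shows why the reversed rule fails. This illustrative sketch looks for an assignment in which $\beta$ is true but the claimed conclusions $\alpha \Rightarrow \beta$ and $\alpha$ are not both true.

```python
from itertools import product

# Reversed Modus Ponens would claim: from beta, infer both (alpha => beta) and alpha.
# Collect every model in which beta holds but those conclusions do not both hold.
counterexamples = []
for alpha, beta in product([False, True], repeat=2):
    implication = (not alpha) or beta  # truth value of alpha => beta
    if beta and not (implication and alpha):
        counterexamples.append({"alpha": alpha, "beta": beta})

print(counterexamples)  # [{'alpha': False, 'beta': True}]
```

The only counterexample makes $\alpha$ false. The implication $\alpha \Rightarrow \beta$ does in fact follow from $\beta$; it is $\alpha$ that cannot be recovered.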

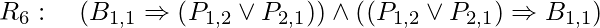

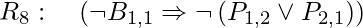

Let's look at how these equivalences and inference rules can be applied in the wumpus world. We begin with the knowledge base containing R1 through R5 and show how to prove $\neg P_{1,2}$, that is, that [1,2] contains no pit. First, we apply biconditional elimination to R2 to obtain R6:

$$R_6:\; (B_{1,1} \Rightarrow (P_{1,2} \lor P_{2,1})) \land ((P_{1,2} \lor P_{2,1}) \Rightarrow B_{1,1})$$

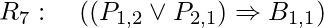

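Next, we apply And-Elimination to R6 to obtain

$$R_7:\; (P_{1,2} \lor P_{2,1}) \Rightarrow B_{1,1}$$

The logical equivalence for contrapositives then gives

$$R_8:\; \neg B_{1,1} \Rightarrow \neg(P_{1,2} \lor P_{2,1})$$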

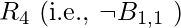

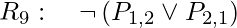

Now we can apply Modus Ponens with R8 and the percept $\neg B_{1,1}$ to obtain

$$R_9:\; \neg(P_{1,2} \lor P_{2,1})$$

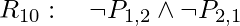

Finally, we apply De Morgan's rule, giving the conclusion

$$R_{10}:\; \neg P_{1,2} \land \neg P_{2,1}$$

That is, neither [1,2] nor [2,1] contains a pit.

We found this proof by hand, but any of the standard search algorithms can be used to find a sequence of steps that constitutes a proof. We just need to define a proof problem as follows:

- Initial state: the initial knowledge base.

- Actions: the set of actions consists of all the inference rules applied to all the sentences that match the top half of the inference rule.

- Result: the result of an action is to add the sentence in the bottom half of the inference rule.

- Goal: the goal is a state that contains the sentence we are trying to prove.
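The four components above map directly onto a breadth-first search. The sketch below is one possible formulation under a hypothetical tuple encoding of sentences: a state is a frozen set of sentences, the actions are applications of the five rules used in the derivation, an action's result adds the inferred sentence, and the goal test checks whether the target sentence is present. The `max_depth` cutoff is an added assumption that keeps the search finite, since the contrapositive rule can keep producing ever longer sentences. Only R2 and R4 are included to keep the example small.

```python
from collections import deque


def successors(state):
    """Yield (rule_name, inferred_sentence) for every rule whose top half matches."""
    for s in state:
        if isinstance(s, str):
            continue  # a bare atom matches none of these rules
        op = s[0]
        if op == "iff":
            _, a, b = s
            yield "Biconditional elimination", ("and", ("implies", a, b), ("implies", b, a))
        elif op == "and":
            yield "And-Elimination", s[1]
            yield "And-Elimination", s[2]
        elif op == "implies":
            _, a, b = s
            yield "Contrapositive", ("implies", ("not", b), ("not", a))
            if a in state:
                yield "Modus Ponens", b
        elif op == "not" and isinstance(s[1], tuple) and s[1][0] == "or":
            _, (_, a, b) = s
            yield "De Morgan", ("and", ("not", a), ("not", b))


def prove(kb, goal, max_depth=8):
    """Breadth-first search for a proof of goal; returns a list of (rule, sentence) steps or None."""
    start = frozenset(kb)
    frontier = deque([(start, [])])
    visited = {start}
    while frontier:
        state, steps = frontier.popleft()
        if goal in state:  # goal test
            return steps
        if len(steps) >= max_depth:
            continue
        for rule, sentence in successors(state):
            if sentence in state:
                continue  # this action would not change the state
            new_state = state | {sentence}  # result: add the bottom half of the rule
            if new_state not in visited:
                visited.add(new_state)
                frontier.append((new_state, steps + [(rule, sentence)]))
    return None


R2 = ("iff", "B11", ("or", "P12", "P21"))
R4 = ("not", "B11")

proof = prove([R2, R4], ("not", "P12"))
for rule, sentence in proof:
    print(f"{rule}: {sentence}")
```

On this knowledge base the search should find a six-step proof: R6 through R10 in the order derived by hand, followed by And-Elimination to extract $\neg P_{1,2}$.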

Thus, searching for proofs is an alternative to enumerating models.
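For comparison, here is a sketch of the model-enumeration approach on the same two sentences, again assuming the hypothetical tuple encoding with `B11`, `P12`, and `P21`. It evaluates the knowledge base in all $2^3$ models and checks that the query holds in every model where the knowledge base does. The `evaluate` and `entails` helpers are illustrative, not a library API.

```python
from itertools import product


def evaluate(sentence, model):
    """Truth value of a tuple-encoded sentence in a model (dict from atom name to bool)."""
    if isinstance(sentence, str):
        return model[sentence]
    op, *args = sentence
    values = [evaluate(arg, model) for arg in args]
    if op == "not":
        return not values[0]
    if op == "and":
        return values[0] and values[1]
    if op == "or":
        return values[0] or values[1]
    if op == "implies":
        return (not values[0]) or values[1]
    if op == "iff":
        return values[0] == values[1]
    raise ValueError(f"unknown connective: {op}")


def entails(kb, query, symbols):
    """KB entails query iff query is true in every model in which all KB sentences are true."""
    for values in product([False, True], repeat=len(symbols)):
        model = dict(zip(symbols, values))
        if all(evaluate(s, model) for s in kb) and not evaluate(query, model):
            return False
    return True


R2 = ("iff", "B11", ("or", "P12", "P21"))
R4 = ("not", "B11")
symbols = ["B11", "P12", "P21"]

print(entails([R2, R4], ("not", "P12"), symbols))  # True
print(entails([R2, R4], "P12", symbols))           # False
```

Enumeration must visit $2^n$ models for $n$ symbols, whereas the proof search only touches sentences that some inference rule can actually be applied to.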
