Amazon EKS is a managed Kubernetes service, and autoscaling is a key Kubernetes feature that automatically scales the number of pods up or down based on the load an application receives. In this guide, I first discuss what Amazon EKS is, then explain the autoscaling feature, and finally walk you through the steps to implement autoscaling on an Amazon EKS cluster.

What is Amazon EKS?

Amazon Elastic Kubernetes Service (EKS) is a fully managed Kubernetes service provided by the AWS cloud platform. It makes it easy to deploy, scale, and manage containerized applications on AWS. It abstracts away much of the complexity of managing the cluster and provisioning servers, so users can focus on developing and running their applications with far less infrastructure setup, which makes developers more productive.

Amazon EKS provides several features, such as:

- Cluster Management: Amazon EKS provides a fully managed control plane and managed node groups, for which AWS provisions and manages the worker nodes (EC2 instances).

- High Availability: Amazon EKS runs the control plane across multiple Availability Zones, which ensures high availability.

- Monitoring: Amazon CloudWatch and AWS CloudTrail can be used to monitor an Amazon EKS cluster; CloudTrail records the calls made to the EKS API.

- Network Management: EKS handles network and security configuration: pods get IP addresses from the cluster's Amazon VPC through the Amazon VPC CNI plugin, CoreDNS provides in-cluster service discovery, and access to the cluster is authenticated with IAM.

- eksctl: The eksctl command-line tool helps create and manage EKS clusters.

What is Autoscaling?

Autoscaling means automatically adjusting compute resources, such as virtual servers or pods, based on the load an application receives. It is one of the key features provided by Kubernetes: the horizontal pod autoscaler, for example, scales the number of pods up or down based on observed metrics such as CPU utilization, which typically rises with traffic. The key goal of autoscaling is to handle a large number of requests while maintaining cost efficiency.

There are two types of autoscaling:

Horizontal Autoscaling: Horizontal autoscaling means adding more instances of virtual servers or pods to handle a large number of requests. The overall traffic is distributed across the different pods or virtual servers. Horizontal autoscaling requires more maintenance, because many instances must be managed, but because several instances share the load, it can scale with zero downtime. It offers high performance, but running more instances also costs more.

Vertical Autoscaling: Vertical autoscaling adjusts the capacity, such as CPU, memory, or storage, of an existing node or pod. Here a single node handles all the requests. Because vertical autoscaling is limited by the largest available machine size, it offers lower peak performance, but it also generally costs less. Since only one node is used, it carries a higher risk of entire system failure. Vertical autoscaling also does not guarantee zero downtime, because resizing often requires restarting the instance or pod.

Steps To Implement Autoscaling In Amazon EKS

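Step 1: Create an EC2 instance. The commands in Step 2 use apt and x86_64 (amd64) binaries, so they assume an Ubuntu AMI on an x86_64 instance type.

Step 2: Connect to the EC2 instance and install kubectl, the AWS CLI, and eksctl.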

curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"

sudo apt install unzip

unzip awscliv2.zip

sudo ./aws/install -i /usr/local/aws-cli -b /usr/local/bin --update

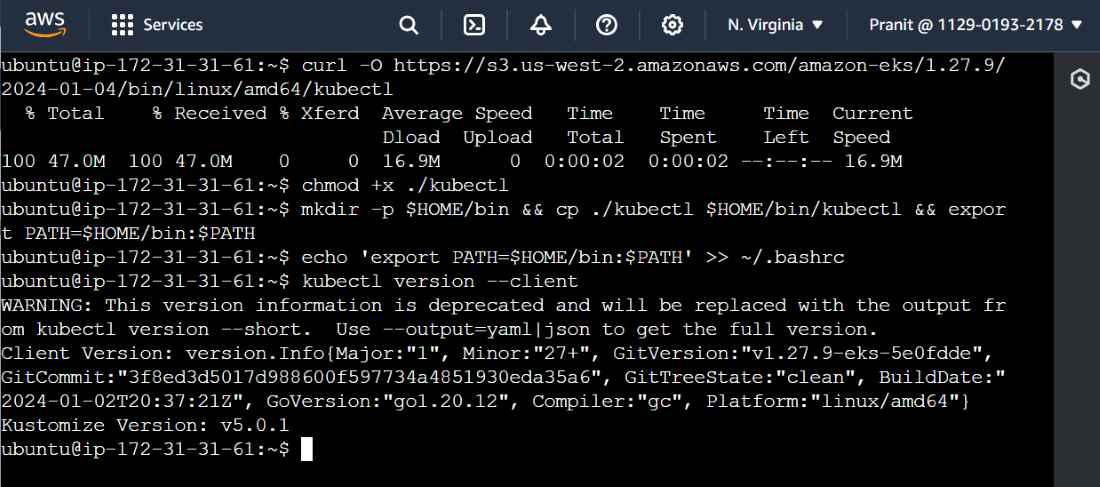

curl -O https://s3.us-west-2.amazonaws.com/amazon-eks/1.27.9/2024-01-04/bin/linux/amd64/kubectl

chmod +x ./kubectl

mkdir -p $HOME/bin && cp ./kubectl $HOME/bin/kubectl && export PATH=$HOME/bin:$PATH

echo 'export PATH=$HOME/bin:$PATH' >> ~/.bashrc

kubectl version --client

curl --silent --location "https://github.com/weaveworks/eksctl/releases/latest/download/eksctl_$(uname -s)_amd64.tar.gz" | tar xz -C /tmp

sudo mv /tmp/eksctl /usr/local/bin

eksctl version
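The download commands above hardcode x86_64 (amd64) binaries. As a hedged sketch, assuming a POSIX shell on Linux, the helpers below map `uname -m` to the architecture label used in the kubectl and eksctl download URLs and check that all three CLIs ended up on PATH. The names `detect_arch` and `check_tools` are hypothetical, not part of any AWS tooling. Also keep in mind that kubectl is only supported within one minor version (older or newer) of the cluster's Kubernetes version, so the 1.27.9 binary may need to be replaced with a newer one that matches your cluster.

```shell
#!/bin/sh
# Map a machine name (default: output of `uname -m`) to the architecture
# label used in the kubectl and eksctl download URLs.
detect_arch() {
  machine="${1:-$(uname -m)}"
  case "$machine" in
    x86_64|amd64) echo amd64 ;;
    aarch64|arm64) echo arm64 ;;
    *)
      echo "unsupported architecture: $machine" >&2
      return 1
      ;;
  esac
}

# Report every named command that is not on PATH; return 1 if any is missing.
check_tools() {
  missing=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For example, `ARCH=$(detect_arch)` lets you substitute `$ARCH` for `amd64` in the kubectl and eksctl URLs on a Graviton (arm64) instance, and `check_tools aws kubectl eksctl` should return silently once Step 2 is complete.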

Step 3: Create an IAM user with administrator access (for example, by attaching the AWS-managed AdministratorAccess policy).

Step 4: Then create an access key for AWS CLI access for the IAM user created in Step 3.
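If you prefer the CLI to the IAM console, Steps 3 and 4 can be sketched as below. This assumes you run it somewhere that already has IAM administrator credentials (for example, AWS CloudShell), not on the new EC2 instance, and the user name you pass, such as eks-admin, is a hypothetical example. It relies on the real `aws iam create-user`, `aws iam attach-user-policy`, and `aws iam create-access-key` commands; the `create_admin_user` wrapper is only for illustration, and full administrator access is appropriate only for a demo like this one.

```shell
#!/bin/sh
# Create an IAM user, attach the AWS-managed AdministratorAccess policy,
# and print a new access key pair for it. Stops at the first failed call.
create_admin_user() {
  user="$1"
  aws iam create-user --user-name "$user" || return 1
  aws iam attach-user-policy --user-name "$user" \
    --policy-arn arn:aws:iam::aws:policy/AdministratorAccess || return 1
  # The secret access key is only returned once, so save it securely.
  aws iam create-access-key --user-name "$user" \
    --query 'AccessKey.[AccessKeyId,SecretAccessKey]' --output text
}
```

Running `create_admin_user eks-admin` prints the access key ID and secret access key, tab-separated, ready to paste into `aws configure`.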

Then configure the AWS CLI on the EC2 instance. When prompted, paste the access key ID and secret access key, and set the default region to us-east-1, the region used in this guide.

aws configure
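Before creating the cluster, it is worth confirming that the CLI has a default region and is authenticating as the new IAM user. A minimal sketch, assuming the profile was set with `aws configure` as above; `check_aws_identity` is a hypothetical helper built on the real `aws configure get` and `aws sts get-caller-identity` commands.

```shell
#!/bin/sh
# Confirm the AWS CLI has a default region and valid credentials,
# then print which IAM identity it is using.
check_aws_identity() {
  region=$(aws configure get region)
  if [ -z "$region" ]; then
    echo "No default region set; run 'aws configure' again." >&2
    return 1
  fi
  # Fails if the access key ID or secret access key is wrong.
  arn=$(aws sts get-caller-identity --query Arn --output text) || return 1
  echo "Authenticated as $arn in $region"
}
```

If the printed ARN ends in the user name from Step 3, the CLI is ready for eksctl.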

Step 5: Now create an EKS cluster by using the command below.

eksctl create cluster --name gfg-cluster --region us-east-1 --node-type t3.small --nodes-min 1 --nodes-max 2
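eksctl normally writes the new cluster's credentials to ~/.kube/config when it finishes; if it did not, `aws eks update-kubeconfig --region us-east-1 --name gfg-cluster` adds them. A quick way to confirm the worker nodes have joined is to count the nodes whose STATUS column reads Ready. This is a minimal sketch; `count_ready_nodes` is a hypothetical helper that parses the default `kubectl get nodes --no-headers` output.

```shell
#!/bin/sh
# Count worker nodes whose STATUS column (2nd field) is exactly "Ready".
count_ready_nodes() {
  kubectl get nodes --no-headers | awk '$2 == "Ready" { n++ } END { print n + 0 }'
}
```

Once the node group is up, `count_ready_nodes` should print a number between 1 and 2, matching --nodes-min and --nodes-max.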


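Step 6: Now create a Deployment YAML configuration file (for example, deployment.yaml). Keep all the YAML files from Steps 6 to 9 in one directory, because Step 10 applies every file in it. The CPU request set here matters: the autoscaler measures CPU utilization as a percentage of this request.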

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        ports:
        - containerPort: 80
        resources:
          limits:
            cpu: 500m
          requests:
            cpu: 200m

Step 7: Then create a Service YAML configuration file (for example, service.yaml) that exposes the nginx pods as nginx-service.

apiVersion: v1
kind: Service
metadata:
  name: nginx-service
spec:
  type: NodePort
  selector:
    app: nginx
  ports:
  - port: 80
    targetPort: 80

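Step 8: Configure the Metrics Server, which provides the CPU metrics that the horizontal pod autoscaler relies on. First, download the Metrics Server manifest (components.yaml) into the same directory as the other YAML files.

curl -LO https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

Now make some changes to components.yaml. Find the metrics-server container in the Deployment section (around line 140 in the release used here; the exact line varies between releases) and paste the code below into it. The --kubelet-insecure-tls flag skips verification of the kubelets' serving certificates, so use it only on test clusters like this one.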

command:
- /metrics-server
- --kubelet-insecure-tls
- --kubelet-preferred-address-types=InternalIP
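Editing the downloaded file by hand is brittle because line numbers change between releases. An alternative sketch, assuming the upstream components.yaml (where metrics-server is the first container and already has an args list) has already been applied in Step 10, is to append the flag to the running Deployment with a JSON patch. `patch_metrics_server` is a hypothetical wrapper around the real `kubectl patch --type=json` command; re-applying the unedited components.yaml later would likely undo the change.

```shell
#!/bin/sh
# Append --kubelet-insecure-tls to the args of the first container in the
# metrics-server Deployment (kube-system namespace) using a JSON patch.
patch_metrics_server() {
  kubectl -n kube-system patch deployment metrics-server --type=json \
    -p='[{"op":"add","path":"/spec/template/spec/containers/0/args/-","value":"--kubelet-insecure-tls"}]'
}
```

Afterwards, `kubectl -n kube-system rollout status deployment/metrics-server` waits for the restarted pod, and `kubectl top nodes` should start returning CPU and memory figures.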

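Step 9: Create a horizontal pod autoscaler YAML configuration file (for example, hpa.yaml). It keeps the nginx Deployment between 1 and 10 replicas and targets an average CPU utilization of 20%.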

apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: nginx
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: nginx
  minReplicas: 1
  maxReplicas: 10
  targetCPUUtilizationPercentage: 20
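To see how targetCPUUtilizationPercentage: 20 turns into a replica count, the Kubernetes documentation gives the autoscaler's core rule as desiredReplicas = ceil[currentReplicas * (currentMetricValue / desiredMetricValue)], skipped when the ratio is within a default tolerance of 0.1. The sketch below reproduces only that arithmetic with integer shell math; the real controller also accounts for unready pods, missing metrics, and a scale-down stabilization window, so treat it as an approximation. The function names are hypothetical. (The same HPA object could also be created imperatively with `kubectl autoscale deployment nginx --cpu-percent=20 --min=1 --max=10`.)

```shell
#!/bin/sh
# CPU utilization as a whole-number percentage of the pod's CPU request,
# e.g. 90m of usage against a 200m request -> 45.
cpu_utilization() {
  usage_m="$1"
  request_m="$2"
  echo $(( usage_m * 100 / request_m ))
}

# Approximate the HPA replica calculation:
#   desired = ceil(current * utilization / target)
# unless |utilization/target - 1| <= 0.1 (the default tolerance),
# then clamp the result to [min, max].
desired_replicas() {
  current="$1"; util="$2"; target="$3"; min_r="$4"; max_r="$5"
  diff=$(( util - target ))
  if [ "$diff" -lt 0 ]; then
    diff=$(( -diff ))
  fi
  # |util - target| * 10 <= target is the integer form of the 10% tolerance check.
  if [ $(( diff * 10 )) -le "$target" ]; then
    desired="$current"
  else
    # Integer ceiling division: (a + b - 1) / b.
    desired=$(( (current * util + target - 1) / target ))
  fi
  if [ "$desired" -lt "$min_r" ]; then desired="$min_r"; fi
  if [ "$desired" -gt "$max_r" ]; then desired="$max_r"; fi
  echo "$desired"
}
```

With the Deployment's 200m request, the 20% target works out to about 40m of CPU per pod. If a single pod averaged 90m, `cpu_utilization 90 200` gives 45, and `desired_replicas 1 45 20 1 10` gives ceil(2.25) = 3 replicas.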

Step 10: From the directory that contains the Deployment, Service, Metrics Server, and horizontal pod autoscaler YAML files, apply them all using the command below.

kubectl apply -f ./
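Right after applying the files, `kubectl get hpa` may show the target as unknown until the Metrics Server has collected its first samples. A small retry helper, sketched below under the assumption of a POSIX shell, polls a command until it succeeds or a timeout expires; `wait_for` is a hypothetical name, and `kubectl top pods -l app=nginx` is one reasonable readiness check because it only succeeds once metrics are being served for the nginx pods.

```shell
#!/bin/sh
# Run a command every INTERVAL seconds until it succeeds or TIMEOUT seconds pass.
# Usage: wait_for TIMEOUT INTERVAL command [args...]
wait_for() {
  timeout_s="$1"
  interval_s="$2"
  shift 2
  elapsed=0
  until "$@" >/dev/null 2>&1; do
    if [ "$elapsed" -ge "$timeout_s" ]; then
      echo "timed out after ${timeout_s}s waiting for: $*" >&2
      return 1
    fi
    sleep "$interval_s"
    elapsed=$(( elapsed + interval_s ))
  done
  return 0
}
```

For example, `wait_for 180 10 kubectl top pods -l app=nginx && kubectl get hpa nginx` waits up to three minutes and then shows the autoscaler with a real CPU percentage.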

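Step 11: Now increase the load on the nginx service by running a temporary pod that requests it in a loop. Press Ctrl+C to stop the load; the --rm flag then deletes the pod.

kubectl run -i --tty load-generator --rm --image=busybox --restart=Never -- /bin/sh -c "while true; do wget -q -O - http://nginx-service:80; done"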

Step 12: Now watch the number of pods increase as the traffic increases. Run this command in a second terminal while the load generator is running.

kubectl get hpa -w

Here you can observe that the number of replicas increased from 1 to 2 while the load generator from Step 11 was running.
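Because Amazon EKS is not part of the AWS Free Tier (see the FAQ below), it is a good idea to delete the demo cluster when you are done. A minimal sketch, assuming the cluster name and region from Step 5; `delete_demo_cluster` is a hypothetical wrapper around the real `eksctl delete cluster` command, whose --wait flag blocks until all of the cluster's resources have been deleted. The EC2 instance from Step 1 and the IAM user from Step 3 are separate resources and must be removed on their own.

```shell
#!/bin/sh
# Delete the demo EKS cluster and its node group; defaults match Step 5.
delete_demo_cluster() {
  cluster="${1:-gfg-cluster}"
  region="${2:-us-east-1}"
  eksctl delete cluster --name "$cluster" --region "$region" --wait
}
```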

Conclusion

In this article, you first learned what the Amazon EKS service is. Then you learned what autoscaling is and why it is necessary. After this, you created an EC2 instance and installed kubectl, the AWS CLI, and eksctl. Finally, you created an EKS cluster and deployed a demo nginx app on it that scales with a horizontal pod autoscaler.

Implementing Autoscaling In Amazon EKS – FAQs

What are the different types of autoscaling?

There are two types of autoscaling: horizontal autoscaling and vertical autoscaling.

What is a node group?

A node group is a logical grouping of worker nodes (EC2 instances) in an Amazon EKS cluster.

What benefits do users get from Amazon EKS?

Amazon EKS provides high availability, automatic scaling, and a secure, reliable platform for running Kubernetes on the AWS cloud platform.

Is Amazon EKS included in the AWS Free Tier?

No. Amazon EKS is not included in the AWS Free Tier: each cluster incurs an hourly charge, in addition to the cost of the EC2 instances that run its nodes.

How do I monitor Amazon EKS clusters?

You can monitor an Amazon EKS cluster using Amazon CloudWatch.
